Entries submitted

C1- Entry by: Agency for Access to Public Information (AAIP)

Description of the initiative:

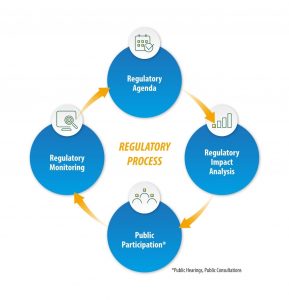

The ANPD structures its regulatory process in stages that ensure the legitimacy and effectiveness of its regulatory actions: preparation of the Regulatory Agenda, Regulatory Impact Assessment (RIA), internal consultations, public consultations, legal analysis, and Regulatory Outcome Evaluation (ROE). The cycle is completed by continuous monitoring of the regulated environment.

Social participation is one of the pillars of the regulatory process. The Authority considers that the legitimacy of regulations depends on actively listening to society. Through instruments such as calls for contributions and public consultations, civil society, academia, the private sector, and other stakeholders contribute directly to the development of regulations. These contributions are handled with maximum transparency and are published together with the reasons for their acceptance or rejection. Whereas public consultations allow society to comment on draft regulations, calls for contributions are open consultations that gather information to support the drafting of new rules. Since 2024, the ANPD has conducted seven calls for contributions, receiving 431 submissions on topics such as anonymization and pseudonymization (74), data subject rights (52), high-risk processing (58), children and adolescents (86), the Regulatory Agenda (37), artificial intelligence and review of automated decisions (124), and biometric data. The ANPD has also promoted webinars, events, lectures, and meetings to disseminate information and engage with society.

The ANPD treats RIA as both a legal requirement and an established practice that provides a technical basis for its regulations. It assesses regulatory alternatives, costs, benefits, and risks to ensure proportionate and effective interventions. Rules are also subject to ROE, which measures their actual regulatory impact and supports continuous improvement.

The Regulatory Agenda, published every two years, guides the ANPD's regulatory priorities, ensuring predictability, legal certainty, and transparency. The Authority periodically publishes monitoring reports on the Agenda, enabling social oversight and performance evaluation. The ANPD also systematically monitors the regulated environment, identifying technological trends, new business models, and regulatory gaps. This allows it to anticipate challenges, update its priorities, and keep the LGPD a “living law” that responds to innovation and social change.

Why the initiative deserves to be recognised by an award?

The ANPD’s regulatory model stands out for its innovative and transparent structure, which combines technical rigor with broad social participation. The Authority organizes its rulemaking process into clear, well-defined stages, ensuring legitimacy and effectiveness in its regulatory actions. A key differentiator is the active engagement of civil society, academia, the private sector, and other stakeholders, which significantly strengthens trust in the regulatory process and supports the development of inclusive, well-founded regulations. In addition, the ANPD continuously monitors the regulated environment, anticipating challenges and adjusting its priorities in response to ongoing technological and social change. This dynamic, participatory model positions the ANPD as an international reference in data protection governance, fully justifying its recognition by the Global Privacy Assembly Awards 2025.

C2- Entry by: Catalan Data Protection Authority (APDCAT)

Description of the initiative:

The FRIA Model has been designed to fulfil the obligations set out in Article 27 of the EU Artificial Intelligence Act with regard to high-risk AI systems. However, it can be used by any organisation involved in designing, developing or deploying AI systems to enable them to identify and mitigate risks to fundamental rights and freedoms.

The FRIA tool is structured into two main parts:

- The FRIA methodology and templates for conducting the Fundamental Rights Impact Assessments; and

- Use cases where this methodology has been applied. These cases demonstrate how the FRIA Model can be used in real-life AI projects to comply with the AI Act by providing an effective risk analysis and management that protects fundamental rights, in particular the right to data protection.

The FRIA template consists of three main building blocks:

- a planning and scoping phase;

- a data collection and risk analysis phase; and

- a risk management phase.

The use cases included in the FRIA tool relate to AI systems in key areas listed in Annex III of the AI Act: education, workers’ management, access to healthcare and welfare services. This case-based empirical approach is crucial for testing the effectiveness of the proposed model in achieving the policy and design objectives of the FRIA, as set out by the EU legislator in the AI Act. The design of the FRIA Model and the use case experience emphasise the importance of involving internal experts throughout the assessment process. They also show that the assessment should be conducted by multidisciplinary teams comprising different professional profiles relating to AI, fundamental rights and risk management. The FRIA Model has been adopted by the Government of Catalonia (Generalitat de Catalunya), various Catalan institutions, the Croatian Personal Data Protection Authority, and the Basque Data Protection Authority.

Why the initiative deserves to be recognised by an award?

The Catalan FRIA Model is a practical tool, based on previous scientific studies on FRIA, that demonstrates through real-world cases how to assess and mitigate the impact of AI on fundamental rights. It helps AI operators identify risks and implement measures to prevent or mitigate them, while ensuring accountability based on a clearly explainable model. To achieve these goals, the model operationalises abstract principles and translates them into variables and indices that can be applied to concrete cases. This is key to building and consolidating knowledge and expertise in the field of artificial intelligence, enabling organisations to respond more effectively to the rapid evolution of AI systems. Finally, the model helps both AI providers and deployers comply with regulatory requirements, promoting innovation while safeguarding individuals’ rights and freedoms.
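The idea of turning abstract principles into variables and indices can be illustrated with a small risk-matrix sketch in Python. It assumes, for illustration only, that likelihood and severity are each rated on a four-level ordinal scale and combined through a lookup matrix; the `Level` scale, the matrix values and the `risk_index`/`assess` helpers are hypothetical and are not taken from the published FRIA Model, whose actual indices may differ.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical four-level ordinal scale (an assumption, not the official FRIA scale)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    VERY_HIGH = 4


# Hypothetical risk matrix: keys are likelihood levels, list positions are severity levels.
RISK_MATRIX = {
    Level.LOW:       [Level.LOW, Level.LOW, Level.MEDIUM, Level.MEDIUM],
    Level.MEDIUM:    [Level.LOW, Level.MEDIUM, Level.HIGH, Level.HIGH],
    Level.HIGH:      [Level.MEDIUM, Level.HIGH, Level.HIGH, Level.VERY_HIGH],
    Level.VERY_HIGH: [Level.MEDIUM, Level.HIGH, Level.VERY_HIGH, Level.VERY_HIGH],
}


def risk_index(likelihood: Level, severity: Level) -> Level:
    """Look up the overall risk index for one fundamental right."""
    return RISK_MATRIX[likelihood][severity - 1]


def assess(impacts: dict[str, tuple[Level, Level]]) -> dict[str, Level]:
    """Map each affected right to its risk index, given (likelihood, severity) ratings."""
    return {right: risk_index(lik, sev) for right, (lik, sev) in impacts.items()}


if __name__ == "__main__":
    # Hypothetical ratings for an AI system used in education
    impacts = {
        "data protection": (Level.HIGH, Level.HIGH),
        "non-discrimination": (Level.MEDIUM, Level.VERY_HIGH),
        "right to education": (Level.LOW, Level.MEDIUM),
    }
    for right, level in assess(impacts).items():
        print(f"{right}: {level.name}")
```

A lookup table rather than a formula keeps every likelihood/severity combination explicit, which makes each resulting index easy to explain and challenge.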

C3- Entry by: Commission Nationale de l’Informatique et des Libertés (CNIL) – France

Description of the initiative:

The AI how-to sheets cover only the development phase of AI systems (not their deployment) where it involves the processing of personal data. They are limited to processing subject to the GDPR. They aim to support a large number of professionals with both legal and technical profiles (such as data protection officers, legal officers, legal professionals and AI practitioners).

These AI how-to sheets, adopted following a public consultation (some of them are being finalised), provide a framework to help professionals with their compliance. They recall the obligations imposed by the GDPR and make recommendations on how to comply with them. These recommendations are not binding: data controllers may deviate from them, under their own responsibility, provided that they can justify their choices. Some recommendations are presented as good practices that go beyond GDPR obligations.

The CNIL’s AI how-to sheets concern the development of systems implementing artificial intelligence techniques involving the processing of personal data. These are referred to as “AI systems”. The definition of AI systems covered by these how-to sheets is aligned with the definition of the EU AI Act.

The AI how-to sheets cover the following topics:

– Sheet 1: Determining the applicable legal regime

– Sheet 2: Defining a purpose

– Sheet 3: Determining the legal qualification of AI system providers

– Sheet 4 (1/2): Ensuring the lawfulness of the data processing – defining legal basis

– Sheet 4 (2/2): Ensuring the lawfulness of the data processing – in case of re-use of data, carrying out the necessary additional tests and verifications

– Sheet 5: Carrying out a data protection impact assessment when necessary

– Sheet 6: Taking into account data protection when designing the system

– Sheet 7: Taking data protection into account in data collection and management

– Sheet 8 (1/2): Relying on the legal basis of legitimate interest to develop an AI system

– Focus 8 (2/2): The legal basis of legitimate interests: focus sheet on measures to implement in case of data collection by web scraping

– Sheet 9: Informing data subjects

– Sheet 10: Respect and facilitate the exercise of data subjects’ rights

– Sheet 11: Annotating data

– Sheet 12: Ensuring the security of an AI system’s development

– Sheet 13: Analysing the status of an AI model

Why the initiative deserves to be recognised by an award?

This initiative is particularly noteworthy because it offers a support tool to a large number of professionals, with both legal and technical profiles, who develop AI systems.

Moreover, these AI how-to sheets are developed through an inclusive process of public consultation, which makes it possible to produce concrete recommendations that respond to AI stakeholders’ concerns and questions.

The AI how-to sheets therefore help improve the accountability of organisations developing AI systems and support the transition from law to practice.

C4- Entry by: Croatian Personal Data Protection Agency

Description of the initiative:

Although the General Data Protection Regulation (GDPR) has been in force since May 2018, achieving full compliance remains a significant challenge, particularly for small and medium-sized enterprises (SMEs). To address these challenges, the Croatian Personal Data Protection Agency, in cooperation with its partners, developed Olivia: an innovative, open-source, user-friendly, and interoperable digital tool specifically designed to support SMEs throughout their GDPR compliance journey.

Olivia offers a comprehensive package of educational and practical resources. It includes fifteen data protection courses that address all key obligations of data controllers and processors as defined by the GDPR. Each course consists of both theoretical and practical components. In the theoretical part, users can explore lessons explaining specific GDPR obligations, view educational videos, and take quizzes to assess their knowledge. The practical modules provide data controllers with templates and tools to generate internal documentation that demonstrates compliance and accountability. Additionally, the Olivia platform hosts twenty webinars covering a range of data protection topics. These webinars are permanently accessible and free of charge to all interested stakeholders.

Olivia is both a virtual teacher and an assistant. It contains a small online academy that offers SMEs, and indeed all data controllers, a series of learning modules to improve their knowledge of personal data protection, and it also serves as a practical tool to help organisations create internal documents that demonstrate their compliance and accountability. It was successfully launched in 2024 and will be updated regularly to ensure its continued relevance and effectiveness. The Croatian DPA is now developing modules on the interplay between the GDPR and artificial intelligence.

To further support users, a detailed user manual, handbook, and an instructional video have been developed and uploaded to the Olivia platform to serve as a lasting educational resource. The “Olivia” digital tool has empowered SMEs, but also data protection officers across the EU, to improve GDPR compliance through user-friendly support, educational resources, and international collaboration. It enhances SMEs’ expertise, encourages a culture of privacy, and promotes EU-wide engagement through its open-source, multilingual design. Olivia is adaptable and scalable, enabling the seamless integration of new modules and language versions to support GDPR compliance across diverse national contexts.

Why the initiative deserves to be recognised by an award?

Olivia deserves recognition because it represents a pioneering, practical, and sustainable response to a genuine need among SMEs for GDPR compliance support. Despite being in force since 2018, the GDPR remains challenging, especially for smaller businesses with limited resources. Olivia bridges this gap through an open-source, interoperable, user-friendly digital tool that combines high-quality educational resources with practical compliance support, empowering SMEs to meet their legal obligations confidently and effectively.

The initiative goes beyond traditional training by offering fifteen structured data protection courses, practical templates to generate internal compliance documents, twenty permanently accessible webinars, and educational videos, all freely available in English. This innovative approach fosters a culture of privacy, strengthens the data protection ecosystem, and supports the consistent application of GDPR principles across various national contexts.

Moreover, Olivia promotes international cooperation and future-proofs its impact by enabling the seamless integration of new modules. By combining education, practical tools, and international collaboration, Olivia sets a unique and replicable standard for raising awareness and improving compliance across the EU and beyond. This makes Olivia truly worthy of recognition as an outstanding and innovative data protection initiative.

C5- Entry by: European Data Protection Supervisor

Description of the initiative:

In 2024, marking its 20th anniversary, the EDPS launched two strategic initiatives to enhance personal data breach management across EU institutions. These initiatives aimed to demystify the breach management process and promote a proactive, transparent, and accountable data protection culture. Both projects were designed in a modular format, allowing them to be adapted and reused in various institutional and international contexts.

The Data Breach Awareness Campaign, targeted at selected participants, was structured to assess existing breach management practices, identify critical areas, evaluate process implementation, and provide tailored recommendations. The project unfolded in four phases:

- Distribution of two structured questionnaires

- Data sorting and assessment

- Active cooperation and feedback

- Final reporting

A key deliverable was the assessment toolkit, developed by the EDPS to map three core pillars of effective data breach management against relevant data protection requirements. Information gathered during the survey phase fed the subsequent stages, enabling tailored analysis.

Each participant received a spider chart summarising strengths and weaknesses, along with concrete recommendations to improve compliance and accountability. Beyond individual assessments, the EDPS identified broader findings relevant across the EU institutional landscape.
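The step from questionnaire answers to a per-pillar spider chart can be sketched in Python. The entry does not name the three pillars or describe the scoring method, so the pillar names, the yes/partial/no answer scale, the 60-point threshold and the `pillar_scores`/`weakest` helpers below are all hypothetical; the sketch only shows how answers could be aggregated into one value per chart axis and how the weakest pillars could be flagged for recommendations.

```python
from collections import defaultdict

# Hypothetical answer scale: how fully a control is implemented.
ANSWER_POINTS = {"yes": 1.0, "partial": 0.5, "no": 0.0}

# Hypothetical pillar names; the EDPS toolkit's actual pillars are not listed in the entry.
PILLARS = ("detection", "assessment and notification", "documentation")


def pillar_scores(answers: list[tuple[str, str]]) -> dict[str, float]:
    """Average the answers per pillar, giving one 0-100 value per spider-chart axis."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for pillar, answer in answers:
        totals[pillar] += ANSWER_POINTS[answer]
        counts[pillar] += 1
    # Pillars with no answers score 0 so that they stand out on the chart.
    return {
        p: round(100 * totals[p] / counts[p], 1) if counts[p] else 0.0
        for p in PILLARS
    }


def weakest(scores: dict[str, float], threshold: float = 60.0) -> list[str]:
    """Pillars below the (assumed) threshold, weakest first, as recommendation targets."""
    return sorted((p for p, s in scores.items() if s < threshold), key=scores.get)


if __name__ == "__main__":
    answers = [
        ("detection", "yes"),
        ("detection", "partial"),
        ("assessment and notification", "no"),
        ("assessment and notification", "yes"),
        ("documentation", "no"),
    ]
    scores = pillar_scores(answers)
    print(scores)
    print(weakest(scores))
```

The scores dictionary maps directly onto the axes of a radar chart, and the flagged pillars indicate where tailored recommendations would be concentrated.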

The second initiative, PATRICIA (Personal dATa bReach awareness In Cybersecurity Incident hAndling), was a table-top exercise simulating a fictional personal data breach. Participants—including Data Protection Officers, IT managers, and Security Officers—were invited to respond collaboratively to the incident through three structured phases. This interactive scenario encouraged participants to apply their institution’s internal procedures, identify potential gaps, and reinforce interdepartmental cooperation. The exercise also served as a platform for peer learning and the exchange of best practices.

Together, these initiatives advanced the principles of accountability, data protection by design, and risk-based personal data breach management. By empowering institutions to respond to breaches more effectively and with greater awareness, the EDPS reaffirmed its commitment to protecting fundamental rights in the digital age.

Why the initiative deserves to be recognised by an award?

The EDPS considers that, through these initiatives, it provided practical and targeted guidance on personal data breach management to the participants, supporting them in understanding and fulfilling their legal responsibilities. The focus was placed on internal processes, while fostering a culture of transparency and accountability. Strengthening preparedness, empowering Data Protection Officers and IT professionals, and advancing a resilient and compliant data protection framework remain core objectives in safeguarding fundamental rights in the digital era. The overall outcome of the initiatives led to specific recommendations to facilitate compliance under data protection law. The EDPS received highly positive feedback on both projects.

Moreover, both projects are adaptable to a wide range of contexts and may be replicated by other stakeholders. The EDPS is able to provide supporting materials upon request. In particular, the assessment toolkit developed during these initiatives holds potential for further development, with the aim of transforming it into an automated tool accessible to all data controllers in future.

Notably, the PATRICIA project was repeated in 2025 with updated and enriched content, reflecting the continued value and impact of this initiative.

C6- Entry by: UK Information Commissioner’s Office (ICO)

Description of the initiative:

The ICO’s Anonymisation and Pseudonymisation Guidance provides detailed data protection advice for organisations on how to carry out anonymisation and pseudonymisation in compliance with UK data protection law.

The guidance defines anonymisation as the process of removing personal identifiers so individuals can no longer be identified, directly or indirectly. It distinguishes this from pseudonymisation, where identifiers are replaced but the data can still be re-linked to individuals with additional information, kept separately. While anonymised data falls outside data protection law, pseudonymised data remains within its scope.

Key topics covered in the guidance include:

- how anonymisation and pseudonymisation apply across the UK GDPR and the Data Protection Act 2018, as well as other related legislation.

- how organisations must assess the likelihood of re-identification using the “motivated intruder” test, which considers whether an attacker could identify individuals using available resources and means reasonably likely to be used, considering cost, time to re-identify and the available technologies.

- how to choose appropriate anonymisation methods such as generalisation, aggregation, and differential privacy, and pseudonymisation techniques like tokenisation and encryption.

- the requirements for accountability, including assigning responsibility, maintaining documentation, and conducting regular reviews of anonymisation processes.

- how to share anonymised data safely with other parties, including working with third parties and ensuring transparency.
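Two of the techniques named above, and a simple re-identification check, can be illustrated in Python. The sketch assumes a toy record layout and uses keyed hashing (HMAC-SHA256) as one form of tokenisation, age banding as generalisation, and k-anonymity as a rough indicator of re-identification risk. None of the helper names come from the ICO guidance, and k-anonymity alone is not the motivated intruder test, which also weighs the means and information available to an attacker. The tokenised output remains pseudonymised (personal) data: whoever holds the separately stored key can recompute tokens for known identifiers.

```python
import hashlib
import hmac
import secrets
from collections import Counter


def tokenise(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed token (pseudonymisation).

    The key is the 'additional information' and must be kept separately.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def generalise_age(age: int, band: int = 10) -> str:
    """Generalise an exact age into a band, e.g. 37 -> '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"


def k_anonymity(rows: list[dict], quasi_identifiers: tuple[str, ...]) -> int:
    """Size of the smallest group of rows sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # hypothetical key; store it apart from the dataset
    records = [
        {"email": "a@example.org", "age": 34, "postcode_area": "M1"},
        {"email": "b@example.org", "age": 37, "postcode_area": "M1"},
        {"email": "c@example.org", "age": 52, "postcode_area": "M2"},
        {"email": "d@example.org", "age": 58, "postcode_area": "M2"},
    ]
    released = [
        {
            "token": tokenise(r["email"], key),
            "age_band": generalise_age(r["age"]),
            "postcode_area": r["postcode_area"],
        }
        for r in records
    ]
    print(k_anonymity(released, ("age_band", "postcode_area")))  # 2
```

A low k (for example 1) signals that some individuals are unique on their quasi-identifiers and that further generalisation or aggregation may be needed before release.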

The guidance provides a risk-based, proportionate approach which can be applied to each organisation’s context. It also supports public authorities in complying with related legislation like the Freedom of Information Act when releasing anonymised datasets. The guidance also includes practical case studies that illustrate how organisations can apply the techniques discussed in real-world scenarios. These case studies were developed in collaboration with organisations that use anonymisation and pseudonymisation in innovative ways. While not prescriptive, these examples offer valuable insights into effective implementation.

Why the initiative deserves to be recognised by an award?

The ICO’s guidance on anonymisation and pseudonymisation exemplifies how data protection authorities can operationalise accountability and embed it into organisational practice. This guidance provides practical steps for organisations to build robust internal frameworks that support the lawful, transparent, and well-evidenced use of anonymised or pseudonymised data.

It encourages organisations to treat anonymisation as a risk management process, with clear roles and responsibilities, senior oversight, and formalised review mechanisms. The guidance highlights the importance of Data Protection Impact Assessments (DPIAs), internal audit trails, and staff training—ensuring that anonymisation decisions are documented, repeatable, and auditable.

This proactive approach aligns with the spirit of accountability found across global data protection frameworks: not merely to comply with the law, but to demonstrate that compliance is embedded in governance structures. By moving accountability from theory into practice, the guidance provides a model for regulators worldwide.

Through this initiative, the ICO has advanced the global understanding of how anonymisation can be governed effectively, responsibly, and transparently—making it a compelling candidate for recognition under the GPA Award’s Accountability category.

C7- Entry by: UK Information Commissioner’s Office (ICO)

Description of the initiative:

The ICO has been conducting data protection audits for over 20 years, assessing organisations’ compliance with data protection legislation and alignment with best practice, to assist organisations to improve their data protection practices. The ICO has now leveraged this knowledge and expertise by publishing its audit toolkits, in the form of the Data Protection Audit Framework, to equip organisations with a powerful tool to better manage and improve their data protection compliance and, ultimately, benefit data subjects. The ICO’s Data Protection Audit Framework consists of nine audit toolkits, based upon the toolkits that the ICO uses when conducting its own compliance audits of organisations, covering the following areas: Accountability, Records management, Information and Cyber Security, Training and Awareness, Data Sharing, Requests for Data, Personal Data Breach Management, Artificial Intelligence, and Age-Appropriate Design.

Each toolkit consists of:

- Some of our audit “control measures”. These are examples of measures that organisations should have in place to manage data protection risks and ensure they are effectively complying with data protection law.

- A list of ways in which they can meet the ICO’s expectations in relation to each of the “control measures”. The toolkits list the most likely ways to meet ICO expectations.

- Additional options to consider based on examples of good practice we’ve seen during our audits.

We suggest that organisations start with the Accountability toolkit to assess their accountability measures. This toolkit supports the foundations of an effective privacy management programme. The other eight toolkits take a more in-depth look at specific areas of data protection law and allow organisations to audit their compliance in more detail. To further help organisations audit, report on and improve their data protection compliance, each toolkit is supported by a downloadable tracker that helps the organisation conduct the assessment and then track any actions it plans to take to address areas in need of improvement.

Why the initiative deserves to be recognised by an award?

The ICO has taken an innovative approach to improving organisations’ accountability by creating the Data Protection Audit Framework, an audit tool that allows them to manage their own data protection compliance, identify and focus on areas requiring improvement, and make better investment decisions. This ultimately benefits data subjects by ensuring that their information is better protected and more secure. This approach allows the ICO to reach a wider audience than it would otherwise be able to by carrying out audits with its own resources, and allows more organisations to benefit from the ICO’s audit expertise. The Data Protection Audit Framework has been well received, with thousands of visitors accessing the webpages since their launch and positive feedback from users.

C8- Entry by: UK Information Commissioner’s Office (ICO)

Description of the initiative:

The Information Commissioner’s Office (ICO) developed guidance on consumer Internet of Things (IoT) products and services in response to the widespread adoption of connected devices in UK households. While these technologies offer convenience and innovation, they also pose significant data protection challenges because of their capacity for continuous, large-scale data collection in environments where people have the highest expectation of privacy.

Recognising the potential for non-compliance in the IoT market, as highlighted by civil society and academic research, the ICO sought to proactively support both consumers and industry. To ensure the guidance was grounded in real-world concerns, the ICO conducted consumer research using a citizen jury approach. This participatory method allowed everyday users to share their experiences, expectations, and concerns about IoT privacy, shaping the direction and content of the guidance.

The resulting guidance provides clear, actionable advice for manufacturers and service providers, particularly in four key areas:

- How to obtain valid user consent;

- How to deliver effective privacy information;

- How to uphold individual rights; and

- How to design for multiple users of a single device.

To enhance usability, the guidance includes visual examples of IoT interfaces, ranging from small screens and mobile apps to voice and sound-based systems, demonstrating how to meet legal requirements for transparency and consent in each context. By combining regulatory certainty with practical design solutions, the ICO’s initiative helps organisations build privacy into IoT products from the outset, fostering trust and compliance in a complex IoT ecosystem.

Why the initiative deserves to be recognised by an award?

The draft guidance for consumer IoT products and services exemplifies innovation in data protection regulation by addressing one of the most complex and fast-evolving areas of consumer technology. The ICO’s guidance stands out for its user-centred development process, incorporating insights from a citizen jury to ensure the final product reflects real consumer needs and expectations. It also breaks new ground in regulatory communication by using visual design examples tailored to the unique constraints of IoT interfaces. This practical approach bridges the gap between legal requirements and technical implementation, making data protection compliance more accessible for developers and manufacturers. At a time when connected devices are becoming ubiquitous, this guidance provides a timely and essential tool for safeguarding people’s rights. It not only supports industry compliance but also empowers consumers by promoting transparency and accountability in the IoT products they use every day.

C9- Entry by: International Enforcement Working Group

Description of the initiative:

The Concluding joint statement on data scraping (the Concluding Statement) builds on the co-signatories’ engagement with some of the world’s largest social media companies following the Joint statement on data scraping and the protection of privacy (the Initial Statement), issued in 2023. The Concluding Statement provides additional guidance to help companies ensure that their users’ personal information is protected from unlawful scraping.

The Initial Statement is an enforcement cooperation action led by members of the GPA International Enforcement Working Group (IEWG) to protect the vast amounts of personal data accessible online. Following its release in 2023, co-signatories continued their engagement with six of the world’s largest social media companies. This engagement resulted in fruitful dialogue with several key industry players allowing for better understanding of data scraping.

Based on this work, in October 2024 the co-signatories released the Concluding Statement, which highlights the authorities’ expectations for organizations that host publicly available personal information, including to:

- Comply with privacy and data protection laws when using personal information, including from their own platforms, to develop artificial intelligence large language models;

- Deploy a combination of safeguarding measures and regularly review and update them to keep pace with advances in scraping techniques and technologies; and

- Ensure that permissible data scraping for commercial or socially beneficial purposes is done lawfully and in accordance with strict contractual terms.
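Limiting how many requests a single account can make is one safeguard often used against automated scraping, and it also shows why a combination of measures is needed: a scraper can spread its requests across many accounts. A minimal per-account token-bucket sketch in Python follows; the `TokenBucket` and `AccountLimiter` classes and the capacity and refill values are hypothetical, and a real platform would pair such a limiter with other measures (for example bot detection) and tune it over time.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allow short bursts while capping the sustained request rate."""

    def __init__(self, capacity: float, refill_per_second: float) -> None:
        self.capacity = capacity                    # maximum burst size
        self.refill_per_second = refill_per_second  # sustained allowed rate
        self.tokens = capacity
        self.last: Optional[float] = None

    def allow(self, now: Optional[float] = None) -> bool:
        """Return True and consume one token if a request may proceed."""
        now = time.monotonic() if now is None else now
        if self.last is not None:
            elapsed = max(0.0, now - self.last)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class AccountLimiter:
    """One bucket per account; refusals could feed other anti-scraping signals."""

    def __init__(self, capacity: float = 20, refill_per_second: float = 0.5) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, account_id: str, now: Optional[float] = None) -> bool:
        if account_id not in self.buckets:
            self.buckets[account_id] = TokenBucket(self.capacity, self.refill_per_second)
        return self.buckets[account_id].allow(now)


if __name__ == "__main__":
    limiter = AccountLimiter(capacity=3, refill_per_second=1.0)
    print([limiter.allow("acct-1", now=0.0) for _ in range(4)])  # [True, True, True, False]
    print(limiter.allow("acct-1", now=1.0))  # True: one token refilled after one second
```

The optional `now` argument makes the limiter deterministic to test; in production it would fall back to `time.monotonic()`.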

The Concluding Statement was endorsed by 16 privacy enforcement authorities from six continents:

- Agency for Access to Public Information, Argentina

- Office of the Australian Information Commissioner, Australia

- Office of the Privacy Commissioner of Canada

- Superintendencia de Industria y Comercio, Colombia

- Office of the Data Protection Authority, Guernsey

- Office of the Privacy Commissioner for Personal Data, Hong Kong, China

- Privacy Protection Authority, Israel

- Jersey Office of the Information Commissioner, Jersey

- National Institute for Transparency, Access to Information and Personal Data Protection, Mexico

- Commission de Contrôle des Informations Nominatives, Monaco

- Commission Nationale de contrôle de la protection des Données à caractère Personnel, Morocco

- Office of the Privacy Commissioner, New Zealand

- Datatilsynet, Norway

- Agencia Española de Protección de Datos, Spain

- Federal Data Protection and Information Commissioner, Switzerland

- Information Commissioner’s Office, United Kingdom

Why the initiative deserves to be recognised by an award?

The Concluding joint statement on data scraping (the Concluding Statement) is a successful collaborative compliance initiative of the IEWG that led to effective engagement with social media companies and drew attention to an important and timely issue.

The Concluding Statement demonstrates the value of informal compliance actions, showing that by working together, data protection authorities (DPAs) can expand their capacity and amplify their impact on the protection of privacy and personal data. It is a concrete example of how DPAs can cooperate not only through formal joint investigations but also through less resource-intensive soft enforcement actions.

This initiative also highlights the importance of collaboration between DPAs and industry, as it enabled the parties to examine issues related to data scraping. This gave DPAs a deeper understanding of the challenges that organizations face in protecting against unlawful scraping, including increasingly sophisticated scrapers, ever-evolving advances in scraping technology, and the difficulty of distinguishing scrapers from authorized users.

Through this engagement, social media companies generally indicated to DPAs that they have implemented many of the measures from the Initial Statement, as well as further measures, to better protect against unlawful data scraping.

C10- Entry by: National Privacy Commission

Description of the initiative:

To support the effective implementation of the Data Privacy Act of 2012 (DPA), the National Privacy Commission (NPC) issues Circulars that prescribe, specify, or define policies and procedures designed to supplement and clarify the provisions of the DPA and its Implementing Rules and Regulations (IRR). These Circulars are intended to ensure effective implementation and enforcement of the DPA and its IRR, applying to all covered individuals or organizations, or to specific sectors.

The NPC also issues Advisories, which serve as guides or recommendations on the interpretation of the provisions of the DPA and its IRR and on best practices for processing personal data in compliance with the Act and its IRR. Advisories apply to all covered natural or juridical persons, or to a particular sector.

These circulars and advisories address emerging technologies, sector-specific practices, and fundamental principles of data privacy. They guide organizations in implementing accountability across all stages of personal data processing.

Foundational policies, such as those outlining the principles of consent, the concept of legitimate interest, data sharing agreements, and the implementation of security measures, serve as essential frameworks that offer comprehensive guidance for organizations. These policies help ensure compliance with legal and regulatory requirements and demonstrate accountability.

Meanwhile, advisories such as those on AI systems, child-oriented transparency, and deceptive design patterns reflect the NPC’s stance on emerging privacy challenges. Model clauses for cross-border data transfers also help organizations maintain accountability beyond national borders. Recent circulars, such as those on body-worn cameras and CCTV systems, emphasize the need for proportionality, transparency, and security in surveillance-related data collection.

Collectively, these issuances form a comprehensive framework intended to enable both public and private entities to establish robust and effective data governance structures. This helps ensure that accountability becomes not merely a policy but a practice embedded in their operations, fostering a culture of accountability throughout the organization.

Why the initiative deserves to be recognised by an award?

In response to the rapidly evolving digital landscape and growing concerns over personal data protection, the NPC has remained steadfast in its commitment to strengthening data privacy standards and measures. This involves developing policies that help organizations be more accountable.

The NPC also emphasizes the importance of involving stakeholders in the development of policies. This includes soliciting public input during the drafting stage and conducting consultations to ensure that the policies address the challenges faced by entities implementing the Data Privacy Act. This collaborative process encourages organizations to adhere to these policies because they have been developed with broad input and scrutiny, increasing commitment to compliance and accountability in implementing data protection measures.

By outlining best practices and regulatory requirements, these policies issued by the NPC aim to ensure that organizations not only comply with legal obligations but also uphold ethical standards in data processing. They cover critical aspects such as data collection, storage, usage, and sharing, emphasizing the importance of transparency, consent, and safeguarding individuals’ privacy rights. Through these resources, organizations can enhance their data handling processes and cultivate a culture of accountability in all facets of personal data processing.

C11- Entry by: Office of the Information and Privacy Commissioner of Ontario

Description of the initiative:

The Privacy Management Handbook for Small Health Care Organizations (handbook) helps individual practitioners and small health care organizations set up a privacy management framework that is right-sized for their practice. Using this handbook can help identify potential gaps or weaknesses in information management practices that could be continually strengthened to better protect personal health information and preserve patients’ trust.

The Handbook summarizes basic requirements and best practices under Ontario’s health privacy law (PHIPA) in a way that is easy to access and understand. The handbook is filled with practical tips and key takeaways, links to additional resources, and helpful tools and templates that health providers can lift and adapt for their own purposes.

Specifically, the handbook gives tips and tools for success, including:

- information on what one needs to do to meet basic PHIPA requirements

- tips and guidance to help you build a privacy management program that’s right-sized for any individual practice

- additional resources that may be useful for those who want more detail

This handbook is designed primarily for:

- sole health practitioners who independently own and operate their own health care practice

- small group clinics that provide similar or interdisciplinary health care services

- operators of small health care facilities

The handbook responds to a long-expressed need of these small health providers to have access to more practical, operational guidance that they can feasibly implement given their small size and real budgetary constraints.

In short, the IPC created reader-friendly content for the busy health practitioner who is not a privacy specialist and needs ready access to highly practical information that can be understood, feasibly implemented and continually improved upon over time.

Throughout the handbook, we use plain, easy-to-understand language to keep the information simple and straightforward. This sets it apart from existing PHIPA-related guidance, which has traditionally been aimed at larger health institutions, including large hospitals and sophisticated health research institutions.

Why the initiative deserves to be recognised by an award?

Ultimately, building good privacy management practices that demonstrate both compliance with PHIPA and responsive care for patients is essential for maintaining public trust in the health system.

As a modern regulator that aims to have real-world impact, we recognize that it may not always be possible to ramp up to a highly-sophisticated privacy and accountability management framework from one day to the next. This is why we created this practical IPC handbook to support smaller health providers by providing them with a solid base to start from and accompany them along their journey of building more mature privacy programs over time.

The guidance material represents an innovative means by which the IPC is responding to the different needs of different entities in the health sector. This unique resource supports the regulatory compliance efforts of small health providers who do not have the resources of larger organizations but are nonetheless expected to comply with complex legislative requirements.

The handbook is intended to encourage them by giving them the tools they need to start a basic privacy management program and work towards greater privacy maturity over time, rather than feeling overwhelmed and stuck in non-compliance due to a lack of resources.

C12- Entry by: Office of the Information and Privacy Commissioner of Ontario

Description of the initiative:

The IPC developed guidance for third-party contracting practices that addresses the privacy, security and transparency concerns associated with outsourcing. We drew inspiration from past findings and decisions of our tribunal and consulted extensively across different sectors throughout the province to get their practical input.

Organizations can outsource data and services, but not accountability. We encourage organizations to use this guidance to better understand the privacy and accountability obligations that continue to rest with them, even when they contract out certain data processing functions.

The IPC’s guidance is intended to help organizations exercise due diligence and demonstrate the measures they have taken to ensure that privacy and security issues are addressed throughout the procurement process, from planning right up to and including agreement termination.

The guidance document is organized according to five key stages of procurement:

- Part 1: Procurement planning outlines considerations when identifying and evaluating business needs and planning to engage a service provider.

- Part 2: Tendering outlines considerations when developing or reviewing tendering documents, such as requests for proposals.

- Part 3: Vendor selection outlines considerations for access and privacy during the evaluation and award stages of the procurement process.

- Part 4: Agreement outlines considerations when entering into an agreement with a service provider.

- Part 5: Agreement management and termination outlines considerations for the duration of an agreement related to its management, monitoring, enforcement and termination.

This third-party guidance supports our strategic priority of Privacy and Transparency in Modern Government. Our goal is to advance Ontarians’ privacy and access rights by working with public institutions to develop bedrock principles and comprehensive governance frameworks for the responsible and accountable deployment of digital technologies.

This contracting guidance helps organizations exercise due diligence and maintain accountability throughout the entire procurement process. It promotes responsible and transparent deployment of digital technologies by public sector institutions, even when relying on private sector partners to process personal information on their behalf.

While institutions may have all the right contractual provisions in place, without robust due diligence practices throughout the procurement process, they may overlook significant risks and fail to take necessary measures to mitigate them.

Why the initiative deserves to be recognised by an award?

Many jurisdictions have contracting guidance and model service agreements, but contracts are no longer enough. Outsourcing must now be supported by robust due diligence and oversight practices to uphold the highest standards of accountability throughout the entire procurement process.

Timely: Today’s outsourcing practices and complex data flows are blurring lines of custody and control. More than 35% of data breaches globally involve third-party suppliers or vendors. These breaches can damage reputation and trust, and they highlight the need for robust third-party risk management programs.

Unique: Each year, Ontario public sector institutions spend $29 billion on goods and services, including to process personal data. Yet, there is little current guidance available to help them meet their privacy obligations. In response, our guidance establishes a standard for due diligence, oversight, and effective governance.

Useful: Based on best practices, our guidance covers all stages of procurement and is written in clear and accessible language. It aligns with existing Ontario government procurement directives and response by regulated entities has been highly positive to date.

Universal: Our guidance was designed for use by the Ontario public sector but can be adapted to meet the needs of any organization, public or private, in any jurisdiction.

C13- Entry by: Office of the Privacy Commissioner for Personal Data, Hong Kong, China (PCPD)

Description of the initiative:

Since 2021, the PCPD has been proactive in addressing the privacy risks arising from the development and use of AI by publishing a series of guidance materials aimed at both organisations and the public.

In 2021, the PCPD issued the “Guidance on the Ethical Development and Use of Artificial Intelligence” (“Guidance”) to help organisations understand and comply with the relevant requirements of the Personal Data (Privacy) Ordinance (“PDPO”). The Guidance recommends three Data Stewardship Values—being respectful, beneficial, and fair—and sets out seven Ethical Principles for AI, namely accountability, human oversight, transparency and interpretability, data privacy, fairness, beneficial AI as well as reliability, robustness and security.

In 2024, as the use of AI systems developed by third parties became common, the PCPD published the “Artificial Intelligence: Model Personal Data Protection Framework” (“Model Framework”) to assist organisations in complying with the relevant requirements of the PDPO when procuring, customising, implementing, and using AI systems. The Model Framework provides recommendations in four areas: establishing AI strategy and governance, conducting risk assessments and human oversight, customising AI models and implementing and managing AI systems, and communicating and engaging with stakeholders. The Model Framework won the “Hong Kong Public Sector Initiative of the Year – Regulatory” award in the Asia Pacific GovMedia Conference & Awards 2025.

In March 2025, the PCPD published the “Checklist on Guidelines for the Use of Generative AI by Employees” (“Guidelines”) to assist organisations in developing internal policies or guidelines on the use of generative AI by employees. The Guidelines cover areas such as scope of permissible use of generative AI, protection of personal data privacy, lawful and ethical use and prevention of bias, and data security.

Beyond organisations, members of the public increasingly use AI in their daily lives. Thus, in 2023, the PCPD published the “10 TIPS for Users of AI Chatbots” leaflet to help the public use AI chatbots safely while protecting their personal data privacy. The leaflet covers important considerations for users when registering with and communicating with AI chatbots, as well as tips for safe and responsible use.

Why the initiative deserves to be recognised by an award?

As organisations are eager to unlock the potential of AI while navigating complex compliance landscapes, the PCPD recognised that many organisations do not lack commitment to personal data privacy but are often constrained by limited resources and technical know-how.

To bridge this gap, we have developed a suite of practical, accessible resources that show how innovation can thrive without compromising privacy.

Our publications offer clear, actionable guidance aligned with general business processes, so that organisations can integrate it seamlessly into their operations. Understanding the need for simplicity, we created concise pamphlets on the Guidance and the Model Framework, and designed the Guidelines as a checklist, distilling key recommendations into digestible formats that make adoption feasible for organisations of all technical capacities.

We also recognised the importance of enhancing the privacy awareness of individual users, so we issued practical tips for safe AI chatbot use that empower users to safeguard their privacy in everyday interactions.

This initiative demonstrates that safe, secure and privacy-friendly AI adoption is not only possible but practical.

Recognition through this award would amplify this message globally: responsible innovation is both possible and the path forward as we embrace the opportunities and challenges brought by AI.

C14- Entry by: Privacy Protection Authority of Israel

Description of the initiative:

The Israeli Privacy Protection Regulations (Data Security), 5777-2017 stipulate obligations and actions that a database controller, its processor and its manager are required to perform in order to fulfill their responsibility under Israel’s Privacy Protection Law, 5741-1981, regarding the security of personal data in the database. These detailed Regulations are Israel’s main data security legislation. They are promulgated under the Privacy Protection Law and are enforceable by the Privacy Protection Authority. While the Regulations specify the company’s duties regarding data security, they do not explicitly determine which corporate organ is responsible for carrying them out. In September 2024, the Privacy Protection Authority (“PPA”) issued its “Guidance on the Role of the Board of Directors in Carrying out Corporate Obligations under the Privacy Protection Regulations (Data Security)”. According to the Guidance, in companies in which the processing of personal data is at the core of their activity, or whose activity creates an increased risk to privacy, the board of directors should be significantly involved in carrying out the following obligations and should conduct a discussion regarding the following matters:

- The database definitions document that the company is required to prepare.

- The main principles of the company’s data security procedure.

- The results of the risk evaluation, penetration tests, and periodic audit reports on compliance with the Regulations, which must be conducted according to the security level of the database under the Regulations, as well as data breaches that have occurred in the company.

Moreover, in companies to which the Guidance applies, the board is obligated to oversee the company’s compliance with the Privacy Protection Law and its regulations. As part of this duty of care, the board is required to ensure the existence of a policy addressing effective monitoring, control, and compliance procedures, such as an internal compliance program, and the use and management of personal data on material matters. As set forth in the Guidance, a company whose board does not fulfill this duty of care, or is not sufficiently involved in carrying out the specific obligations prescribed in the Guidance, is allegedly in violation of the Privacy Protection Law and the Data Security Regulations. As such, it may be exposed to sanctions under the Privacy Protection Law, including significant fines introduced in Amendment No. 13 of the Privacy Protection Law, which was enacted in August 2024 and will enter into force in August 2025.

Why the initiative deserves to be recognised by an award?

The Guidance issued by the Israeli Privacy Protection Authority (PPA) in 2024 sets a groundbreaking precedent by embedding data protection accountability at the highest level of corporate governance: the board of directors. This aligns with global trends emphasizing top-down responsibility in privacy governance, but goes further by formalizing the role of the board in overseeing compliance with privacy and data security obligations.

- Elevating Accountability to the Board

The Guidance is a milestone in transforming data protection accountability into a concrete and enforceable governance duty, explicitly placing responsibility for data protection oversight on the board.

- Promoting Proactive and Measurable Oversight

The Guidance sets out specific issues the board must engage with, including:

- Reviewing the Database Definitions Document,

- Overseeing the Data Security Procedure,

- Discussing results of risk assessments, penetration tests, and audits,

- Discussing data breaches,

- Ensuring an internal compliance program is in place.

By mandating deliberations by the board on these defined items, the Guidance provides a clear accountability framework with traceable, auditable steps.

- Risk-Based and Role-Based Approach

Recognizing that not all companies pose the same privacy risk, the Guidance applies these obligations to companies whose core activity involves processing personal data or whose activities generate an increased risk to privacy. This scalable application reflects a nuanced and risk-based approach to the board’s accountability.

- Encouraging Internal Culture of Data Protection Compliance

In a landscape where ambiguity around corporate privacy obligations can lead to inaction, the Guidance offers operational direction. By making board-level involvement an explicit expectation, it enhances internal compliance cultures and strengthens the connection between corporate governance, data protection and data security principles.